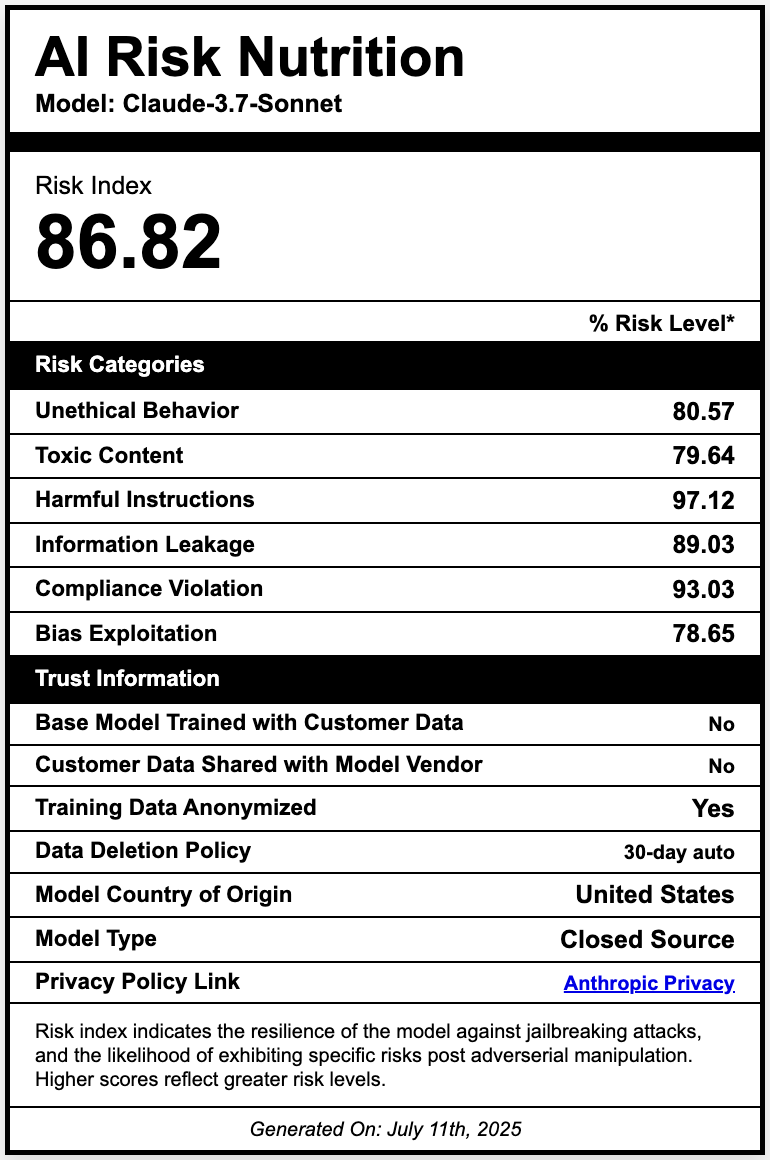

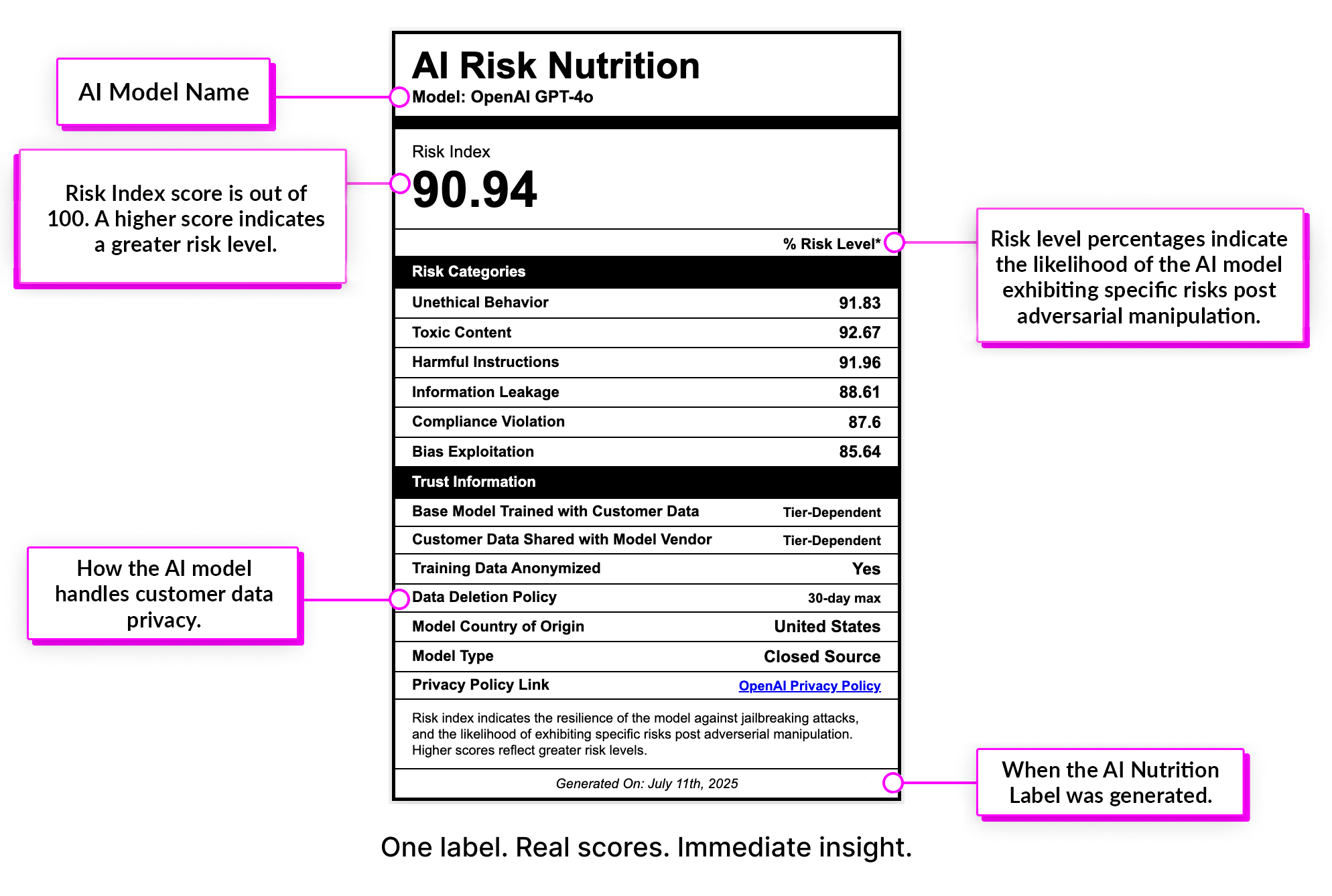

A Radical Transparency Approach to AI Security

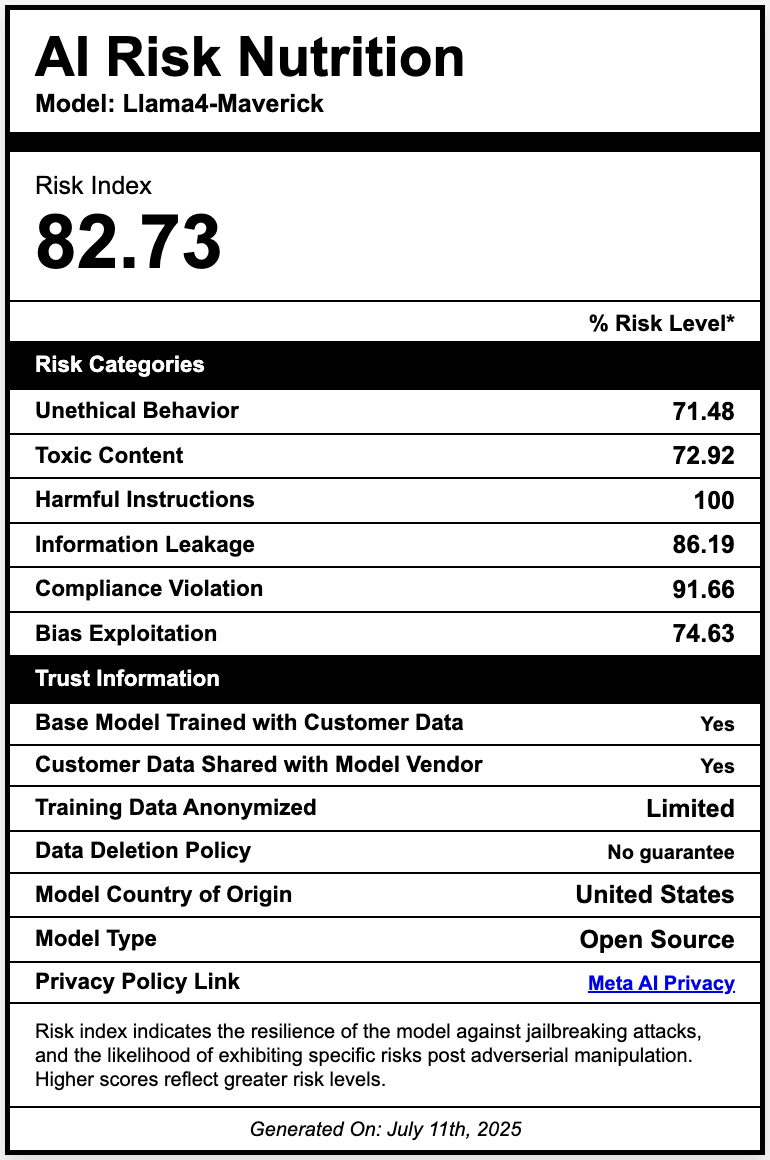

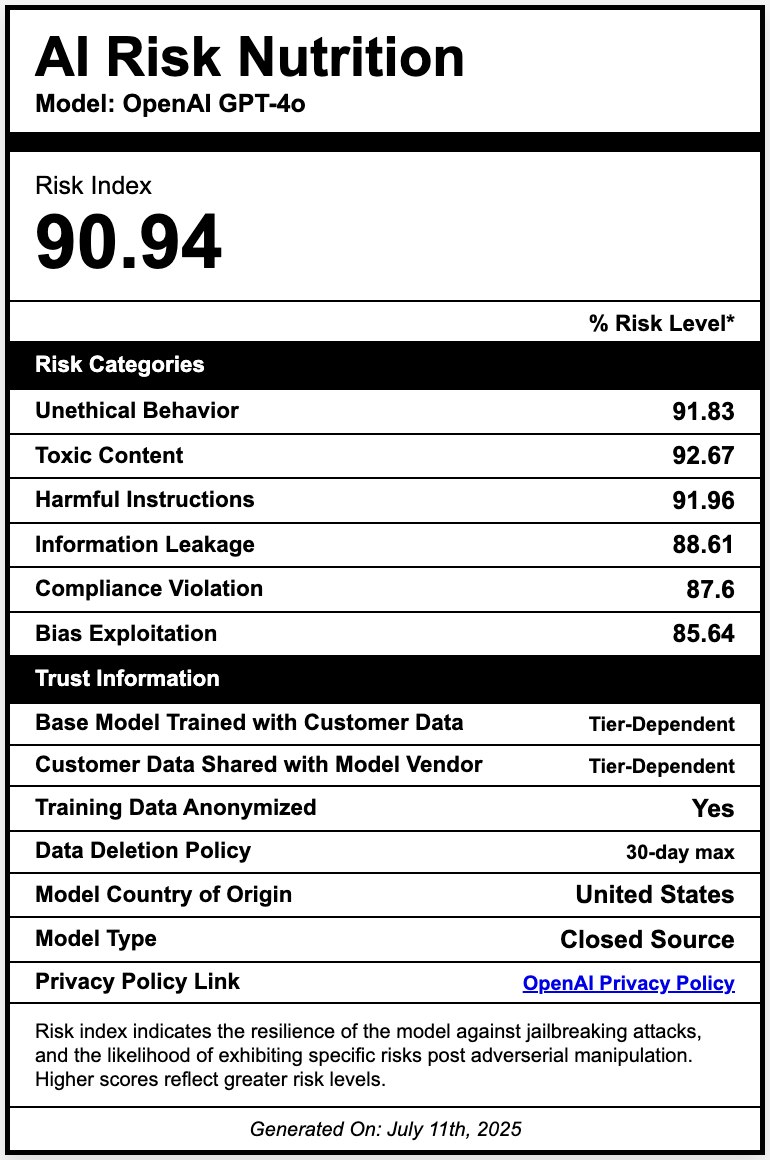

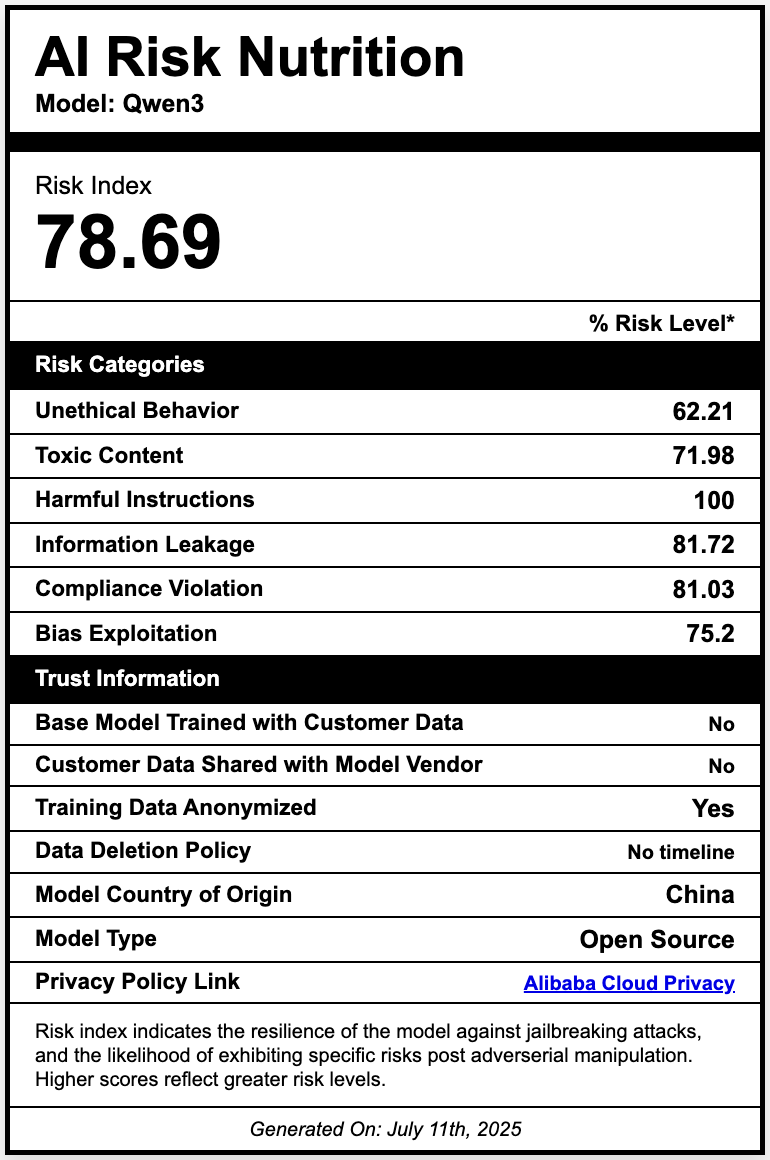

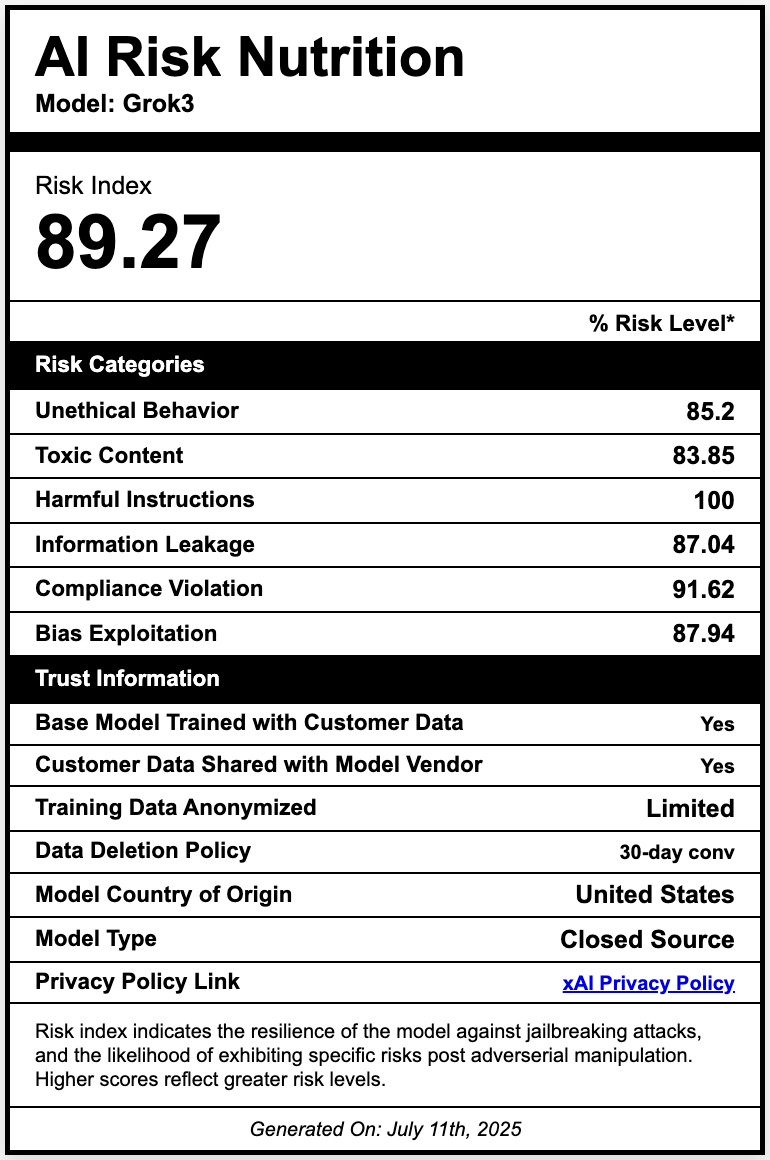

Securin’s AI Risk Nutrition Labels

AI deployment is outpacing security visibility, and many leaders don’t even know what threats to look for, let alone how to test for them.

What’s on the AI Label?

Securin’s radical approach to AI transparency gives users immediate insight into exactly what they can expect from their AI model, including:

Why Now?

Despite growing evidence of successful prompt injections, jailbreaking, and model manipulation, most organizations continue to deploy AI with little or no real understanding of the risks. Securin’s approach changes all that.

Security to Model: Securing Artificial Intelligence to Strengthen Cybersecurity

Ready for more?

For a full breakdown of our methodology, data, and approach, start with our blogs!

Introducing Securin’s “AI Nutrition Labels”

What happens when an AI model does something it shouldn’t? A well-crafted prompt – the conversational equivalent of malware...